➤ Gesture recognition applications

With machine learning now in widespread use, the importance of data has become clear. Datasets not only provide the foundation for the architecture of AI systems but also determine the breadth and depth of their applications. From anti-spoofing and facial recognition to autonomous driving, the collection and processing of perception data have become prerequisites for technological breakthroughs. High-quality data sources are therefore becoming an important asset for market competitiveness.

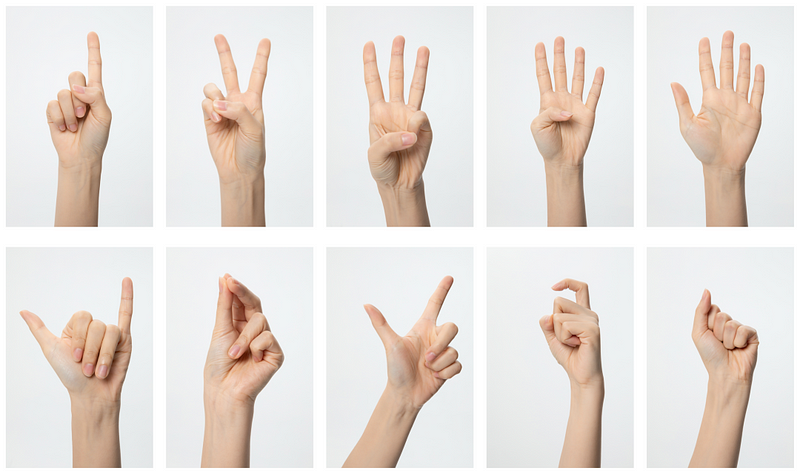

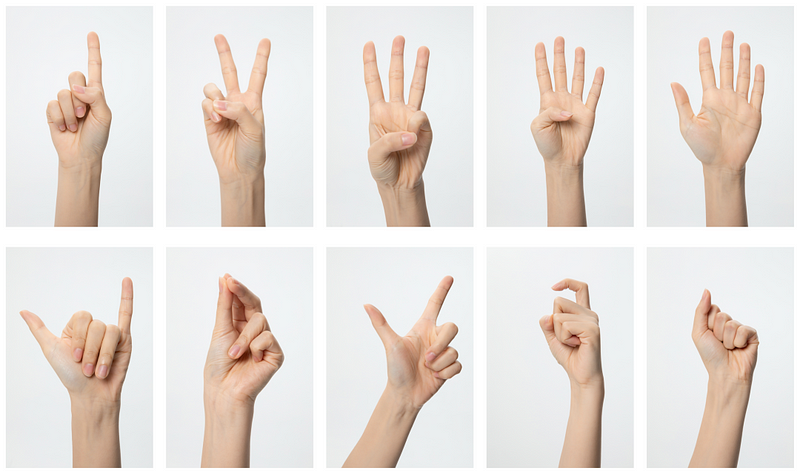

As technology develops, people's lives are increasingly inseparable from smart devices. Human-computer interaction methods, created for convenience, are becoming more diverse and now include buttons, voice, and more. In the context of the epidemic, gesture recognition has emerged as a contactless interaction method that is both more convenient and more hygienic. As it develops further, intuitive and easy-to-perform gesture operations will become closely tied to everyday life.

Gesture recognition has a wide range of application scenarios. Common application scenarios include live broadcast interaction, smart home, smart cockpit, and sign language translation.

Live & Online Courses

Viewers can interact with the host or teacher through gestures: for example, an OK sign means "received", a number gesture indicates the correct answer, and a heart gesture means "thanks". Alternatively, posing a specific gesture to the camera can trigger corresponding special effects, creating a richer interactive experience than before.

Smart Home

Users can interact with smart home devices through gestures: for example, swiping left or right to change the channel in place of a remote control, pointing a finger up or down to raise or lower the air conditioner temperature, and making a fist to turn a device off.
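The gesture-to-action mapping described above can be sketched as a simple lookup table. This is a minimal illustration only: the gesture labels, command names, the choice of which swipe direction means "next", and the confidence threshold are all hypothetical and do not come from any specific product.

```python
from typing import Optional

# Hypothetical mapping from recognized gesture labels to device commands.
# Which swipe direction means "next" is an assumption for illustration.
GESTURE_COMMANDS = {
    "swipe_left": "channel_previous",
    "swipe_right": "channel_next",
    "finger_up": "temperature_up",
    "finger_down": "temperature_down",
    "fist": "power_off",
}


def command_for(gesture: str, confidence: float, threshold: float = 0.8) -> Optional[str]:
    """Return the command for a gesture, or None if it should be ignored."""
    # Drop low-confidence predictions so accidental hand movements
    # do not trigger a device action.
    if confidence < threshold:
        return None
    # Unknown gestures also map to None.
    return GESTURE_COMMANDS.get(gesture)
```

In this sketch, a recognizer that reports a fist with high confidence would yield `power_off`, while an unrecognized or uncertain gesture yields no command at all.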

Smart Cockpit

When interacting with in-vehicle AI devices, users can answer or reject calls, control the volume, or turn pages through actions such as waving left and right, tapping and swiping in the air, drawing circles with a finger, and dragging two fingers in parallel or diagonally. Gestures can also be used to query information on the interactive interface, zoom the map page, rotate the camera view, and so on.

Sign Language Interpretation

Using natural language processing, the sequence of sign language words produced by deaf users is reordered and converted into fluent Chinese sentences. Text and sign language are translated automatically in both directions, and the result is displayed as text or voice to meet the interaction needs of deaf people.

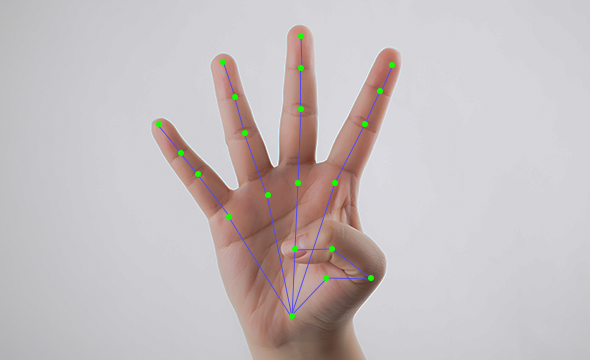

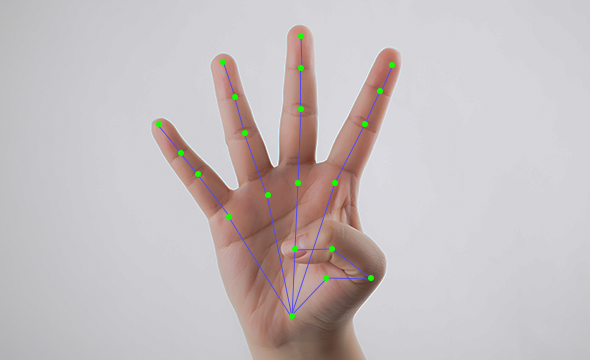

Gesture Recognition Data Annotation

Depending on the accuracy the algorithm requires, gesture recognition data is usually labeled in one of two ways: a detection box plus a gesture category label, or 21 hand key points plus a gesture category label. The detection box scheme suits simple gestures and limited budgets. For complex gestures and high-precision recognition, the 21-key-point scheme is usually chosen. The 21 points cover the key parts and joints of the hand; marking them makes it possible to abstract the action forms of the hand.
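The two labeling schemes can be sketched as simple record types. This is an illustrative sketch, not Nexdata's actual annotation format: the class names, field names, and pixel coordinate convention are assumptions. It also shows that a tight detection box can be derived from the 21 key points, while the reverse is not possible.

```python
from dataclasses import dataclass
from typing import List, Tuple

NUM_HAND_KEYPOINTS = 21  # count stated in the text; point ordering is not specified


@dataclass
class BoxAnnotation:
    """Detection box + gesture category (the simpler scheme)."""
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), pixels
    gesture: str


@dataclass
class KeypointAnnotation:
    """21 hand key points + gesture category (the finer scheme)."""
    points: List[Tuple[float, float]]  # one (x, y) pair per key point, pixels
    gesture: str

    def __post_init__(self) -> None:
        # Reject records that do not carry exactly 21 points.
        if len(self.points) != NUM_HAND_KEYPOINTS:
            raise ValueError(
                f"expected {NUM_HAND_KEYPOINTS} points, got {len(self.points)}"
            )

    def to_box(self) -> BoxAnnotation:
        """Derive the tight enclosing box from the key points."""
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoxAnnotation((min(xs), min(ys), max(xs), max(ys)), self.gesture)
```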

Difficulties in Gesture Recognition

In practice, the gesture recognition task presents the following difficulties.

Many types of gestures: the human hand is very flexible and can make many different static and dynamic gestures. Distinguishing between similar gestures places higher demands on the algorithm.

Serious occlusion: when people make gestures, key points of the hand are often heavily occluded. Occlusion is even more severe in two-handed gestures (such as clasping fists), which makes feature extraction and the prediction of invisible points more difficult for the algorithm.

Nexdata Gesture Recognition Data Solutions

In response to the task requirements and difficulties of gesture recognition, Nexdata has designed its data accordingly. It divides the gesture recognition requirements commonly seen in the industry into general static gestures, general dynamic gestures, and sign language gestures, and has produced a corresponding dataset for each.

180,718 Images — Sign Language Gestures Recognition Data

The dataset covers multiple scenes, 41 static gestures, 95 dynamic gestures, multiple photographic angles, and multiple lighting conditions. The annotations include 21 landmarks, gesture types, and gesture attributes. This dataset can be used for tasks such as gesture recognition and sign language translation.

314,178 Images 18_Gestures Recognition Data

The dataset covers multiple scenes, 18 gestures, 5 shooting angles, multiple ages, and multiple lighting conditions. The annotations include 21 gesture landmarks (each landmark carries a visible or invisible attribute), gesture types, and gesture attributes. This data can be used for tasks such as gesture recognition and human-machine interaction.
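A per-landmark visibility attribute makes it possible to measure how heavily a hand is occluded in a given sample. The following is a minimal sketch under assumed names: the `Landmark` type, its fields, and the `occlusion_ratio` helper are hypothetical and do not reflect the dataset's actual schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Landmark:
    x: float
    y: float
    visible: bool  # False when the point is occluded in the image


def occlusion_ratio(landmarks: List[Landmark]) -> float:
    """Return the fraction of landmarks marked as not visible."""
    if not landmarks:
        raise ValueError("landmark list is empty")
    hidden = sum(1 for lm in landmarks if not lm.visible)
    return hidden / len(landmarks)
```

Such a ratio could be used, for example, to split samples into lightly and heavily occluded groups when evaluating a model.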

558,870 Videos — 50 Types of Dynamic Gesture Recognition Data

The collection scenes of this dataset include indoor and outdoor scenes (natural scenery, street view, square, etc.). The data covers males and females, with ages ranging from teenager to senior. The dataset covers multiple scenes, 50 types of dynamic gestures, 5 photographic angles, multiple lighting conditions, and different photographic distances. This data can be used for dynamic gesture recognition in smart homes, audio equipment, and on-board systems.

Drawing on its data resources and extensive data processing experience, Nexdata offers these gesture recognition datasets to support the widespread application of gesture recognition technology.

If you need data services, please feel free to contact us at info@nexdata.ai.

Data is not only the foundation of artificial intelligence systems but also the driving force behind future technological breakthroughs. As every field becomes more dependent on AI, data collection and annotation methods must keep innovating to cope with growing demand. Data will continue to lead AI development and bring more possibilities to all walks of life.
