➤ In-vehicle behavior recognition

AI technology is now applied in many fields, from smart security to autonomous driving, and every achievement depends on strong data support. As a core element of AI algorithms, datasets are not only the basis for model training but also a key factor in improving model performance. By continuously collecting and labeling diverse datasets, developers can build smarter, more efficient systems.

The in-vehicle behavior recognition task aims to identify the behavior of drivers and passengers in the vehicle and to provide technical support for improving their experience.

Different cockpit behaviors involve different objects to be recognized, so the annotation methods differ as well. Typical annotation methods include face key point annotation, gesture key point annotation, and object & behavior annotation.

➤ Cockpit behavior recognition datasets

● Human Face: This labeling method is used to identify face-related behaviors, such as fatigue driving and gaze shifts. The labels consist of face key points and behavior attributes.

● Human Body & Objects: This labeling method is used to identify behaviors involving the human body and objects, such as smoking, drinking water while driving, and making a phone call while driving. The labels consist of bounding boxes for objects and hands, plus behavior attributes.

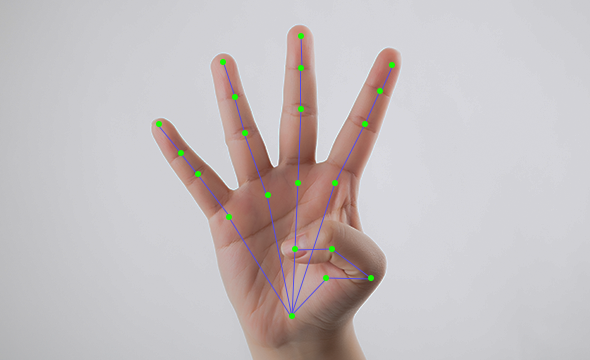

● Gestures: This labeling method is used to identify gestures, such as pointing with different fingers, palm movement, and finger sliding. The labels consist of gesture key points and gesture attributes.
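The three labeling forms above can be represented as simple records. The following is a minimal sketch in Python; the class and field names (`KeyPoint`, `BoundingBox`, `CockpitAnnotation`) and the sample values are hypothetical and are not Nexdata's actual annotation schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class KeyPoint:
    """A single 2D key point in pixel coordinates (hypothetical layout)."""
    x: float
    y: float


@dataclass
class BoundingBox:
    """An axis-aligned box with a class label such as 'hand' or 'phone'."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so a malformed box never yields a negative area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class CockpitAnnotation:
    """One annotated frame: key points and/or boxes plus behavior attributes."""
    kind: str  # "face", "body_object", or "gesture" (assumed category names)
    key_points: List[KeyPoint] = field(default_factory=list)
    boxes: List[BoundingBox] = field(default_factory=list)
    attributes: Dict[str, str] = field(default_factory=dict)


# Illustrative values only: a "phone call while driving" frame.
phone_call = CockpitAnnotation(
    kind="body_object",
    boxes=[
        BoundingBox("hand", 410.0, 220.0, 470.0, 300.0),
        BoundingBox("phone", 425.0, 200.0, 460.0, 270.0),
    ],
    attributes={"behavior": "phone_call"},
)
```

A face or gesture record would fill `key_points` instead of `boxes`, with the behavior or gesture attributes stored in `attributes`.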

In practice, the cockpit behavior recognition task faces the following three difficulties:

Behavior: There are many types of cockpit behaviors, and some of them (such as motion sickness and fatigue driving) are partly subjective, which makes them difficult for algorithms to identify;

Lighting: While driving, the car is exposed to strong light from different directions, which causes uneven illumination of faces, human bodies, objects and other targets. At night there is too little light: with the cabin lights off, an ordinary color camera cannot capture enough information for recognition, and an infrared camera is needed to assist;

Performance: The in-vehicle environment differs from the laboratory. The power consumption and computing power of the equipment are constrained, yet the required recognition accuracy is very high. How to shrink the model while keeping accuracy high is therefore an important research direction.
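As a rough illustration of the lighting difficulty, the sketch below chooses between an RGB frame and an infrared frame based on the mean brightness of the RGB image. The function names and the threshold of 40 (on a 0 to 255 scale) are assumptions made for illustration; a real system would tune or replace this rule.

```python
from typing import Sequence

# A frame is a sequence of rows; each RGB pixel is an (r, g, b) triple in 0..255.
RgbFrame = Sequence[Sequence[Sequence[int]]]


def mean_luma(frame: RgbFrame) -> float:
    """Average brightness using the ITU-R BT.601 luma weights."""
    total = 0.0
    count = 0
    for row in frame:
        for r, g, b in row:
            total += 0.299 * r + 0.587 * g + 0.114 * b
            count += 1
    # An empty frame is treated as completely dark.
    return total / count if count else 0.0


def choose_channel(rgb_frame: RgbFrame, dark_threshold: float = 40.0) -> str:
    """Return 'infrared' when the RGB frame is too dark, else 'rgb'."""
    return "infrared" if mean_luma(rgb_frame) < dark_threshold else "rgb"


# Illustrative 2x2 frames, not real sensor data.
night = [[(5, 5, 5), (10, 8, 6)], [(0, 0, 0), (12, 12, 12)]]
day = [[(180, 170, 160), (200, 190, 185)], [(150, 140, 130), (220, 210, 205)]]
```

Here `choose_channel(night)` selects the infrared frame and `choose_channel(day)` selects the RGB frame. A global mean does not address uneven illumination from strong directional light, which would need a per-region measure.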

To address the task requirements and difficulties of behavior recognition, Nexdata has developed a series of datasets. All data is collected with proper authorization from the people recorded, so customers can use it with confidence.

● Multi-race — Driver Behavior Collection Data

The data covers multiple ages, multiple time periods and multiple races (Caucasian, Black, Indian). The driver behaviors include dangerous behavior, fatigue behavior and visual movement behavior. The data was captured with binocular cameras with RGB and infrared channels.

● Driver Behavior Collection Data

The data covers multiple ages and multiple time periods. The driver behaviors include dangerous behavior, fatigue behavior and visual movement behavior. The data was captured with binocular cameras with RGB and infrared channels.

● Passenger Behavior Recognition Data

The data covers multiple age groups, multiple time periods and multiple races (Caucasian, Black, Indian). The passenger behaviors include normal behavior and abnormal behavior (carsickness, sleepiness, and losing items). The data was captured with binocular cameras with RGB and infrared channels.

➤ Gesture Recognition Datasets

● 18_Gestures Recognition Data

The data diversity includes multiple scenes, 18 gestures, 5 shooting angles, multiple ages and multiple light conditions. The annotations cover 21 gesture landmarks (each with a visible/invisible attribute), the gesture type and gesture attributes.

● 50 Types of Dynamic Gesture Recognition Data

The collection scenes of this dataset include indoor and outdoor scenes (natural scenery, street view, square, etc.). The data covers Chinese males and females, with ages ranging from teenagers to seniors. The data diversity includes multiple scenes, 50 types of dynamic gestures, 5 photographic angles, multiple light conditions and different photographic distances.
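For the 21-landmark annotation described for the 18_Gestures data, a record with a per-landmark visibility flag could be checked as follows. This is a sketch only: the tuple layout `(x, y, visible)` and the function names are assumed for illustration, since the dataset's file format is not specified here.

```python
from typing import List, Optional, Tuple

Landmark = Tuple[float, float, bool]  # (x, y, visible): assumed layout
NUM_LANDMARKS = 21


def validate_gesture(landmarks: List[Landmark]) -> None:
    """Raise ValueError if the record does not have exactly 21 landmarks."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(
            f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}"
        )


def visible_bbox(
    landmarks: List[Landmark],
) -> Optional[Tuple[float, float, float, float]]:
    """Bounding box (x_min, y_min, x_max, y_max) over visible landmarks only."""
    pts = [(x, y) for x, y, visible in landmarks if visible]
    if not pts:
        return None  # every landmark is occluded
    xs, ys = zip(*pts)
    return (min(xs), min(ys), max(xs), max(ys))


# Illustrative data: 21 points on a line, with the last one marked not visible.
sample = [(float(i), float(2 * i), i != 20) for i in range(NUM_LANDMARKS)]
validate_gesture(sample)
```

Restricting the box to visible landmarks is one possible design choice; occluded points are often annotated by estimate and can be less reliable for cropping the hand region.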

If you want to know more about the datasets or how to acquire them, please feel free to contact us: info@nexdata.ai.

Data is the key to the success of artificial intelligence. We must strengthen data collection methods and data security to achieve more intelligent and efficient technical solutions. In a rapidly developing market, only by continuously innovating and optimizing artificial intelligence can we build a safer, more efficient and more intelligent society. If you have data requirements, please contact Nexdata.ai.
